How do you interact with a Large Language Model? And how do you integrate its knowledge inside a real-life application, for example a bot, a translation system, or something similar? In this short video I show how easy it is to interact with a Large Language Model (LLM), in this case the famous GPT-3, using the command line.

GPT-3 is a generative model because it is able to produce novel texts based on a prompt, i.e. a description of the requested task. Generative models are so good, especially in the fluidity and naturalness of their texts, that their output is generally not distinguishable from human generated texts, at least by untrained evaluators (see here an interesting study on evaluation). Generative models are the BIG thing in AI in 2022. Every months new models or new improved versions are released. And every time it is a WoW effect.

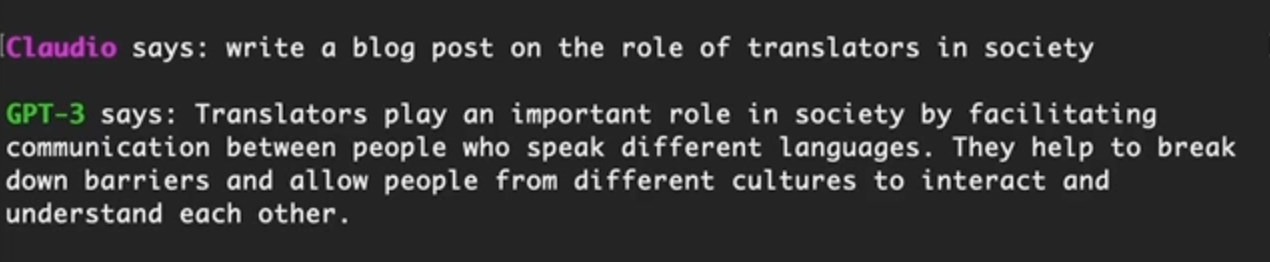

For this video, I wrote a simple command line interface. It allows me to access GPT-3 comfortably and interactively from my computer. Once this is possible, you can even integrate it in larger applications. Anybody with a couple of hours of python practice can use it. You can download it from my GitHub account.

Disclaimer: if you only want to experiment with the model, you do not need this script. Simply go to the GTP-3 webpage and use their user-friendly UI.

I asked the model to perform some advanced linguistic actions. In particular:

- write a short blog post about the role of translation in society

- write 10 tweets about how to become a translator

- translate a sentence between 2 languages

It is worth understanding what Large Language Models really are, how they work, and what their limitations are in the face of these amazing results. Let’s have a look.

The most interesting thing to know about language models is that they are not software programmed to do anything specific. Rather, they are a mathematical representation of language which is learned by absorbing an incredible amount of text in one or more languages. A mathematical representation means that the language model is – in principle – nothing more than word statistics. So, it essentially works on the surface of the language without knowing what those words really mean in the real world. This is a subtle distinction.

Large Language Models are complex systems and complex systems can exhibit emergent behaviors, i.e. something that is a non-obvious new ability that was not intentionally taught to the model. For example, many think that consciousness is an emergent phenomenon (epiphenomenon) of the biological activities that take place in the human brain, i.e. the brain has not been ‘programmed’ to generated consciousness (there are many good introductory books on consciousness, for example Seth‘s one). Similarly, LLM demonstrates capabilities beyond what you would expect given the data they were trained on. To be clear, while LLMs are fascinating, they are (now) not conscious (read this article about a Google scientist who claims a bot is sentient). Yet they demonstrate skills that they were not directly trained to learn. That’s remarkable.

Take the ability to translate: Unlike traditional machine translation engines, which are specifically trained to perform this task using parallel data, i.e. texts and their translations, LLMs are not explicitly trained for this type of activity. However, if you are asked to do a translation, the translation is simply done (see my video).

Even more interesting, LLMs don’t expect clear commands to perform this task. Instead, they use what we call prompts. Prompts are not codified commands like you would expect for traditional software. They’re rather task expressed in natural language, and there aren’t even clear restrictions on how you can express them.

While the results shown in the video are remarkable in terms of text quality and adequacy, there is no doubt that LLM has many limitations. Since language models do not really know much about the real world, they can be factually incorrect (say things that don’t make sense and are potentially harmful, see for example the infamous message Alexa said to a kid). They can uncover issues like gender and racial bias, use of inappropriate language, etc. For these reasons, an ‘unfiltered’ use of such systems is not possible or recommended. Their output needs to be filtered by computational means (see for example how Cicero, the META’s latest AI system that achieves spectacular results in the game of Diplomacy, uses filters to avoid nonsensical dialogue, or how the latest ChatGTP answers followup questions, admits its mistakes, challenges incorrect premises, and rejects inappropriate requests) or by human-in-the loop editing (see this article on the augmented copy editor).

The usefulness of Large Language Models lies in the ability to integrate them into complex applications to perform complex tasks involving languages. Many applications are still waiting to be invented.

This is an article adapted from EasyAI, a project aiming at explaining AI to the humanities (translators, interpreters, journalists, etc.) in a discursive and simple way. Webpage: https://easyai.uni-mainz.de