Most of the time, live communication takes place using both verbal and non-verbal means, which are adjusted to the situational needs and communicative objectives of the interlocutors. This also plays a crucial role in multilingual communication and in machine interpretation.

A typical verbal means is so-called topicalization, i.e. positioning the most salient part of the information at the beginning of a sentence to convey a specific meaning.

Example: A sentence like “I won’t eat that pizza” can be transformed into “That pizza I won’t eat” to stress, for example, that you won’t eat that specific pizza but will eat the one next to it, possibly expressing a certain level of disgust for the first one (it may have pineapple on it!).

As for non-verbal means, speakers may look at what they are referring to in order to improve listeners’ comprehension.

Example: If someone remarks “That is really big” while looking at a specific object, the listener will immediately know what “that” is, maybe a piece of cake. In the absence of visual context, one might be left wondering about the reference: what is meant by “that”? In the context of multilingual communication, a literal translation might suffice, or it might miss the mark. If the speaker’s widened eyes and raised eyebrows suggest astonishment, it becomes clear that they are conveying shock or amazement rather than asking for an explanation of what a particular object is.

I’ve previously explored sentiment analysis from videos, highlighting the potential for visual cues such as facial expression or body language to inform more nuanced translations. Similarly, knowing the objects in a room, the way people are dressed (even down to the color of their clothes), whether they wear glasses or not, and so forth, might become important information for discerning the meaning of what people are saying. In other words, vision plays an important role when it comes to live translation. And while communicating without visual cues is certainly possible, it is clear that some aspects of communication will be compromised.

One of the biggest challenges of machine interpreting is exactly this: its inability to perceive visual inputs. Machine interpreting is unimodal. To make sense of the situation, translation decisions rest solely on linguistic cues and overlook other important layers of communication, such as visual stimuli. Neural Machine Translation (NMT), for example, is unimodal. Large language models, an important evolution in machine translation, are unimodal too. While they introduce some level of contextual reasoning, as I elaborated in my post on Situational Awareness in Machine Interpreting, and can potentially improve translation quality over NMT through contextualization, they remain inherently dependent on the language input alone.

What if we could now add visual information to the translation process?

The leap towards vision-enhanced machine interpreting might be just around the corner (which means some years from now). Newly released vision systems combined with large language models boast an impressive capability to dissect images, with live video analysis being only a step away. They are now able to transform visual data into what I call, for lack of a better term, situational meta-information (i.e. what we see). This can be harnessed to enrich the translation process, achieving higher levels of quality and precision.

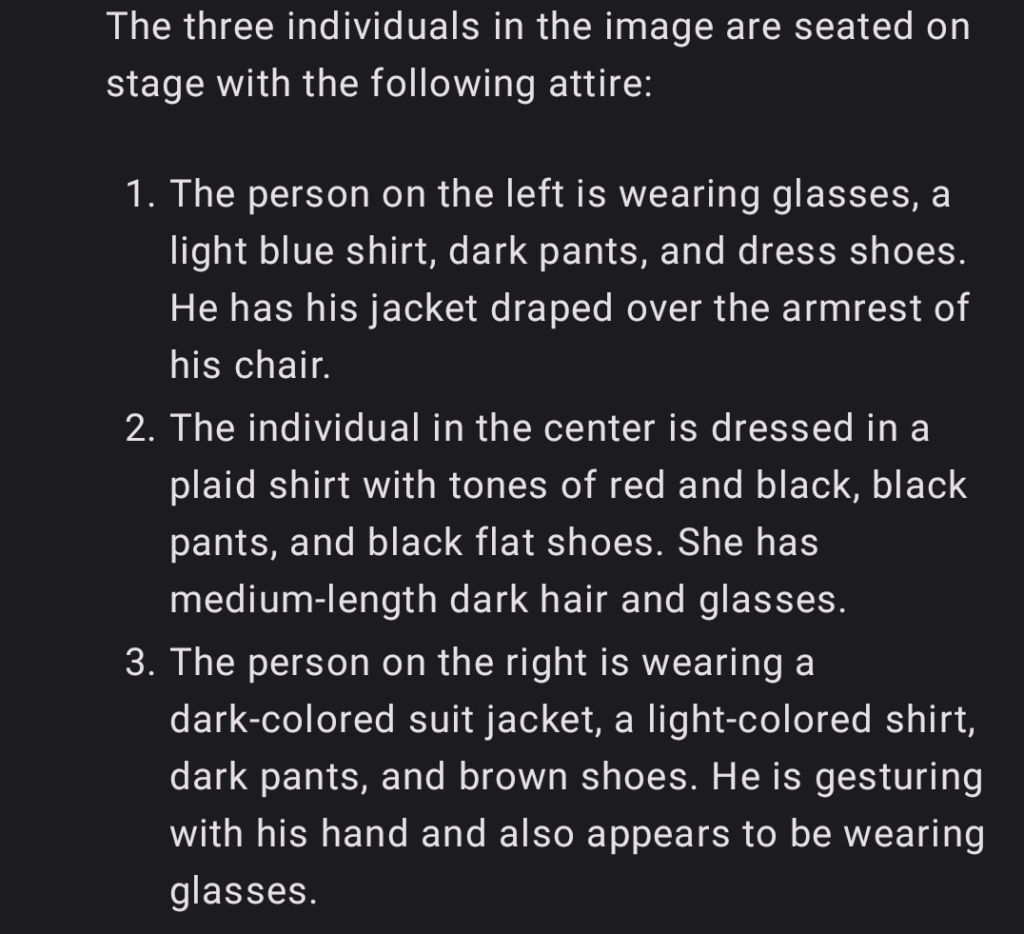

Let’s examine what insights we can glean today from this image (Fig. 1), which can be captured from a real-time video feed. The scene depicts a typical stage setting. For example, we’d want to generate situational meta-information about the three speakers, their attire, and other relevant details to aid the translation process. When appropriately prompted, a large language model, like GPT-4, combined with a vision system, can provide the descriptions in Fig. 2.

The depth and granularity of the description are striking. The kind of information that can be retrieved and the level of detail can be adjusted based on specific needs. There’s a plethora of information that could be extracted. Not only information about the people as in the example, but also details about the setting, information on the posters in the background etc. What for? For instance, consider the level of formality that the venue might dictate. If we know this information, we can adapt the formality of the translation accordingly.

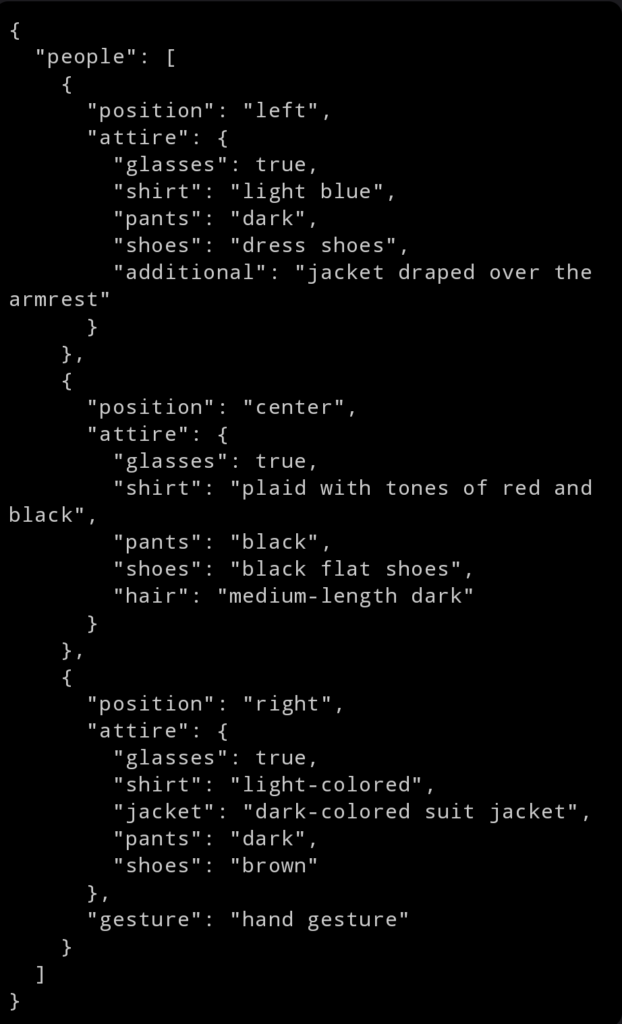

To make this information computer-friendly, especially for a Large Language Model tasked with translation, we need to structure this data systematically. Well-structured meta-information about the situation can then be used in the translation process by means of frame and scene prompting. Fortunately, structuring data in a computer-friendly way is straightforward. With some iterations of prompting, we can derive a nice structure of the extracted metadata, as illustrated in Fig. 3.
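To give a rough idea of what such a structure could look like, here is a minimal Python sketch. The class names, field names and sample values are my own placeholders for illustration; they are not the actual output of the vision model nor the exact structure shown in the figure.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class Person:
    # All fields are hypothetical; a real schema would depend on the use case.
    position: str                         # where the person appears in the frame
    attire: str                           # free-text description from the vision model
    wears_glasses: Optional[bool] = None  # None when the model could not tell


@dataclass
class Scene:
    setting: str                          # e.g. "conference stage"
    formality: str                        # assumed labels: "formal", "neutral", "informal"
    people: List[Person] = field(default_factory=list)


def scene_to_json(scene: Scene) -> str:
    """Serialize the scene description so it can be pasted into a prompt."""
    return json.dumps(asdict(scene), indent=2, ensure_ascii=False)


# Placeholder values, invented for this example only.
scene = Scene(
    setting="conference stage",
    formality="formal",
    people=[
        Person(position="left", attire="dark suit", wears_glasses=True),
        Person(position="centre", attire="red dress"),
    ],
)

print(scene_to_json(scene))
```

Keeping unknown values as `None` (rendered as `null` in JSON) rather than guessing seems the safer choice here, since a wrong visual attribute could mislead the translation more than a missing one.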

The crux of this approach is that we’ve achieved – fully automatically – a detailed understanding of the scene, in this example the speakers’ attire. When performing speech translation, this data can be leveraged to refine translation decisions.
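As an illustration of how this could work in practice, the sketch below injects the scene description into a translation prompt. It is only one possible way of doing it under my own assumptions: the function name, the prompt wording and the sample scene are hypothetical, and the resulting string would still have to be sent to a language model of your choice.

```python
import json


def build_translation_prompt(utterance, source_lang, target_lang, scene):
    """Combine an utterance with situational meta-information in one prompt.

    `scene` is a plain dict of visual meta-information (hypothetical schema).
    """
    scene_block = json.dumps(scene, indent=2, ensure_ascii=False)
    return (
        f"You are interpreting from {source_lang} into {target_lang}.\n"
        "Situational meta-information extracted from the video feed:\n"
        f"{scene_block}\n"
        "Use it only to resolve ambiguity (references such as 'that', "
        "gender, level of formality).\n"
        f"Utterance: {utterance}\n"
        "Translation:"
    )


# Placeholder scene, invented for this example only.
scene = {
    "setting": "conference stage",
    "formality": "formal",
    "speaker_gaze_target": "slice of cake",
    "speaker_expression": "astonished",
}

prompt = build_translation_prompt(
    "That is really big", "English", "Italian", scene
)
print(prompt)
```

With the gaze target and the facial expression in the prompt, the model has at least a chance to resolve what “that” refers to and to render the utterance as an exclamation of surprise rather than a neutral statement.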

Such an enhancement of speech translation is powerful. This is primarily because it emulates the innate process humans undergo, whether consciously or subconsciously, every day during interpersonal communication: triangulating multiple sources of information to understand the meaning of what people are saying. In other words, it helps to ground the translation process in the reality of the communicative setting.

The path forward is still long and there are many unknowns, but again the trajectory seems clear. Speech translation systems might grow exponentially in complexity. But multilingual communication is indeed complex. If we want to achieve high-quality translation, we will need to go down this path of complexity (at least until scaling or new approaches – maybe – solve the quality issues in translation on their own).

Try it out: you can try LLaVA, an open-source multimodal model, here.